Scalable software architecture for terminal operations

Architecture and Software Architecture

First, define architecture in context. In container operations, architecture shapes how systems handle flows, rules, and hardware. The word covers topology, protocols, and how components connect. Software architecture then becomes the plan for those moving parts. It sets the boundaries for services, data stores, and APIs. It guides choices that affect throughput, latency, and fault handling. For example, a design that places compute close to data will reduce network hops and speed critical user journeys.

Software architects must balance goals. Core drivers include performance, reliability, and maintainability. Performance requires clear SLAs for response times and short paths for critical messages. Reliability demands retries, health checks, and resilience patterns. Maintainability needs readable modules, tests, and simple communication between teams. These constraints affect the software architecture and the technical architecture decisions you make.

As Martin Fowler puts it in his Software Architecture Guide, “The important decisions in software development vary with the scale of the context that we’re thinking about.” What counts as an architectural decision depends on scale, and decisions made early on shape future options. Therefore, architects should adopt approaches that let teams evolve systems without rewriting core logic. This fits modern software engineering and supports iterative software development.

Also, consider how a company like Loadmaster.ai fits. Loadmaster.ai trains RL agents in a digital twin and integrates with existing TOS via APIs. That approach benefits from an architecture that isolates control agents from telemetry ingestion. It reduces dependency on historical data and keeps business logic auditable. For further reading on planner tooling and explainable models, see our piece on explainable AI for container port planners.

Finally, build in observability and design for change. Use dashboards that show critical paths. Add unit tests for business logic and integration tests for critical user journeys. These steps make it easier to optimize later and to keep systems manageable as they scale.

Scalability and Scalability Challenges

Scalability means the system can grow without collapse. You can scale vertically by adding stronger machines, or horizontally by adding more nodes. Each approach has trade-offs. Vertical scaling gives immediate capacity by increasing CPU or memory. Horizontal scaling spreads load across multiple instances to improve redundancy and throughput. Plan both vertical scaling and horizontal scaling so you can adapt to different peaks.

Workloads often spike. For example, some ports handle more than 10 million TEUs per year (Research on Large-Scale Systems). That averages more than 27,000 TEUs per day, and because each move can fan out into many equipment, gate, and planning messages, it can translate to millions of transactions per day and sharp bursts of telemetry. Network links must carry high volumes; AI-driven analytics may require speeds above 100 Gbps to sustain real-time decision loops (TE Connectivity on AI data center connectivity).

Key scalability challenges include peak traffic, real-time processing, and hardware integration. Peak traffic can create hotspots, and a single bottleneck can cause a cascading outage. Integration with specialized hardware, like RTGs, AGVs, or crane controllers, requires robust edge gateways and stable protocols. While handling large amounts of data, systems must also honor data retention policies to meet compliance and auditing needs.

Plan capacity with clear metrics. Use transactions per second, concurrent sessions, and data ingress per minute. Also track response times and queue depths. Perform load tests that mimic busy periods and edge-case spikes. For security and logging, adopt centralized pipelines that can process terabytes daily; this mirrors what enterprise SIEM solutions handle at scale (Scalability in Log360).
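
To make these metrics concrete, here is a minimal capacity sketch. The daily event count, the peak factor, and the per-instance throughput are hypothetical planning inputs, not measurements from a real terminal, and the function names are illustrative.

```python
import math


def average_tps(events_per_day: int) -> float:
    """Average transactions per second across a 24-hour day."""
    return events_per_day / 86_400


def instances_needed(peak_tps: float, tps_per_instance: float,
                     headroom: float = 0.3) -> int:
    """Instances needed to serve peak_tps while keeping spare headroom."""
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    usable_tps = tps_per_instance * (1 - headroom)
    return math.ceil(peak_tps / usable_tps)


# Hypothetical planning inputs (assumptions for illustration only).
daily_events = 8_640_000   # works out to an average of 100 TPS
peak_factor = 5            # assumed ratio of peak load to average load
peak = average_tps(daily_events) * peak_factor

print(f"average: {average_tps(daily_events):.0f} TPS, peak: {peak:.0f} TPS")
print("instances at peak:", instances_needed(peak, tps_per_instance=200))
```

Replace the inputs with numbers from your own load tests. The headroom parameter keeps some capacity free for bursts that the peak factor does not capture.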

To overcome these challenges, keep systems modular, add caches where needed, and partition workloads across multiple regions. Also, design for failure: expect partial outages and make recovery automatic. These steps reduce downtime and help operators focus on planning rather than firefighting.

Monolith and Monolith to Microservices

Many legacy operations run on a monolith. A monolithic app often bundles UI, backend, and integration code in one deployable unit. That works early on. However, as traffic grows, the monolithic design causes performance issues and slows teams. A single database or heavy shared dependency becomes a bottleneck. Teams suffer long deploy cycles and increased outage risk when one change affects the entire system. If you face inconsistency in outcomes across shifts, that often ties back to an overburdened codebase.

A structured path to a microservices approach begins with domain decomposition. Identify bounded contexts and map critical user journeys. Then extract services one by one, starting with low-risk functions. For example, split billing and reporting from cargo tracking. Use API gateways to present unified endpoints while services evolve independently. This staged migration reduces disruption and keeps operational continuity.
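
The gateway step can be sketched as a small routing table. This is an illustrative model, not a real gateway product: the class name, the path prefixes, and the internal hostnames are assumptions. Paths that no extracted service owns keep going to the monolith.

```python
from typing import Dict


class GatewayRouter:
    """Maps request paths to backends; unmatched paths go to the monolith."""

    def __init__(self, default_backend: str) -> None:
        self._default = default_backend
        self._routes: Dict[str, str] = {}

    def extract(self, prefix: str, backend: str) -> None:
        """Register a path prefix that an extracted service now owns."""
        self._routes[prefix.rstrip("/")] = backend

    def resolve(self, path: str) -> str:
        """Return the backend for path, preferring the longest matching prefix."""
        best = ""
        for prefix in self._routes:
            # Match only on a path-segment boundary, so "/billing" does not
            # accidentally capture "/billing-archive".
            on_boundary = path == prefix or path.startswith(prefix + "/")
            if on_boundary and len(prefix) > len(best):
                best = prefix
        return self._routes[best] if best else self._default


# Hypothetical hostnames; billing and reporting are extracted first.
router = GatewayRouter("http://monolith.internal")
router.extract("/billing", "http://billing.internal")
router.extract("/reports", "http://reporting.internal")

print(router.resolve("/billing/invoices/42"))
print(router.resolve("/tracking/containers/C1"))
```

Clients keep calling the same endpoints while ownership moves behind the gateway, one prefix at a time.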

During the monolith to microservices transition, follow clear steps: define contracts, create versioned APIs, and set up automated tests. Keep a single source of truth for schemas during migration and use feature flags to control rollouts. Also adopt event buses to decouple changes; a lightweight message broker can forward domain events while you refactor the backend.
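
A feature flag with a percentage rollout can be sketched as follows. The flag name and subject IDs are hypothetical. Hashing keeps each subject in the same bucket across calls, so raising the percentage only adds subjects and never flips existing ones back.

```python
import hashlib


def bucket(flag: str, subject_id: str) -> int:
    """Deterministically map a (flag, subject) pair to a bucket in 0..99."""
    digest = hashlib.sha256(f"{flag}:{subject_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 100


def is_enabled(flag: str, subject_id: str, rollout_percent: int) -> bool:
    """True when the subject falls inside the current rollout percentage."""
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    return bucket(flag, subject_id) < rollout_percent


# Hypothetical flag guarding a newly extracted billing service.
flag = "use-new-billing-service"
for terminal_user in ("planner-1", "planner-2", "planner-3"):
    target = "new service" if is_enabled(flag, terminal_user, 25) else "monolith"
    print(terminal_user, "->", target)
```

Setting the percentage to 0 acts as a kill switch, which pairs well with the rollback plans discussed later.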

Best practices minimize user impact. Maintain backward-compatible APIs, deploy multiple instances for new services, and monitor traffic to identify new bottleneck patterns. Use a cache for frequently accessed data and prefer asynchronous flows for non-critical updates. Where possible, run experiments in a sandboxed environment and leverage a digital twin to validate decisions before live deploys. For practical integration patterns, see our article on integrating TOS with AI optimization layers.

Finally, retain operational guardrails. Keep rollback plans and ensure teams can revert without database churn. A successful migration requires both technical discipline and strong coordination between operations and development.

Microservices and Distributed Architectures

Microservices promote loose coupling and independent deployments. Teams can ship features faster, isolate faults, and scale specific services to meet demand. Core principles include single responsibility per service, small APIs, and independent data stores where appropriate. This fosters resilient systems and clearer ownership.

In distributed architectures, proven patterns include service discovery, circuit breakers, and load balancing. Service discovery lets services find each other dynamically. Circuit breakers protect the system by failing fast when a downstream service degrades. Load balancers distribute traffic across multiple instances to prevent overload and keep any single instance from becoming a bottleneck.
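
The circuit breaker is the easiest of these patterns to show in a few lines. The sketch below is a simplified, single-threaded version; the thresholds are illustrative, and the injectable clock is an assumption made so the behavior can be tested without waiting.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when the breaker rejects a call without trying the downstream."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_timeout:
            return "half-open"  # allow one trial call through
        return "open"

    def call(self, func: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("downstream marked unhealthy; failing fast")
        try:
            result = func()
        except Exception:
            self._failures += 1
            # Trip on reaching the threshold, or re-trip after a failed trial.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Any success closes the breaker and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

Wrap each outbound call to a downstream service in `breaker.call(...)`. A production version would also need thread safety and metrics, which this sketch leaves out.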

When you build microservices, plan for communication between services carefully. Use lightweight protocols for synchronous calls and an event bus for asynchronous flows. For guaranteed delivery and complex routing, include a broker such as RabbitMQ to handle bursts and retries. Implement idempotent handlers to avoid duplicate side effects.
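
An idempotent handler can be sketched by remembering which event IDs were already processed. The event shape and the billing example are hypothetical. The in-memory set stands in for durable storage, which a real service would update in the same transaction as the side effect.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class MoveCompleted:
    event_id: str       # unique per event, reused unchanged on redelivery
    container_id: str
    fee_cents: int


class BillingHandler:
    """Applies each event at most once, even if the broker redelivers it."""

    def __init__(self) -> None:
        self.charges: Dict[str, int] = {}
        self._seen: Set[str] = set()   # stand-in for a durable dedup table

    def handle(self, event: MoveCompleted) -> bool:
        """Return True if the event was applied, False if it was a duplicate."""
        if event.event_id in self._seen:
            return False
        current = self.charges.get(event.container_id, 0)
        self.charges[event.container_id] = current + event.fee_cents
        self._seen.add(event.event_id)
        return True


handler = BillingHandler()
event = MoveCompleted(event_id="evt-1", container_id="C1", fee_cents=500)
print(handler.handle(event))   # first delivery is applied
print(handler.handle(event))   # redelivery is ignored
print(handler.charges)
```

The deduplication key must come from the producer. If the consumer generates it on receipt, a redelivered message would look new.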

Concrete examples help. A cargo tracking service can publish location events. A scheduling service can consume those events and adjust quay or yard plans. A billing service can listen to completed moves and generate invoices. These services can scale independently, so the tracking service can run many more compute instances during peak arrivals while billing runs at steady capacity.
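
The flow above can be modeled with a small in-process event bus. This is a teaching sketch: topic names and event fields are assumptions, and delivery here is synchronous, whereas a broker would make it asynchronous and durable.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Event = Dict[str, str]
Handler = Callable[[Event], None]


class EventBus:
    """Delivers each published event to every handler subscribed to its topic."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> int:
        """Deliver the event and return how many handlers received it."""
        handlers = list(self._handlers.get(topic, []))
        for handler in handlers:
            handler(event)
        return len(handlers)


yard_plan: Dict[str, str] = {}   # scheduling service state
invoices: List[str] = []         # billing service state


def update_yard_plan(event: Event) -> None:
    yard_plan[event["container_id"]] = event["location"]


def create_invoice(event: Event) -> None:
    invoices.append(f"invoice for {event['container_id']}")


bus = EventBus()
bus.subscribe("container.located", update_yard_plan)
bus.subscribe("move.completed", create_invoice)

# The tracking service publishes; it does not know who consumes.
bus.publish("container.located", {"container_id": "C1", "location": "block-A-03"})
bus.publish("move.completed", {"container_id": "C1"})
print(yard_plan, invoices)
```

Because the publisher only knows topic names, you can add or scale consumers without touching the tracking service.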

Design each service with health probes and tracing. Distributed tracing is essential for diagnosing slow paths across multiple services and for spotting hidden latency. Use centralized logging and a dashboard that highlights critical operations to speed incident resolution. To explore how AI can balance moves, read our analysis on reducing yard congestion with AI.

Keep one eye on costs. Distributed systems multiply endpoints and data transfers. Use partition strategies to localize data and limit cross-region chatter. Also implement a small cache for frequently accessed reference data and choose databases tailored to each service’s needs.

CQRS and Event Sourcing

Separate reads from writes with CQRS. Command Query Responsibility Segregation splits write models from read models. This allows optimizing each side independently. For writes, enforce strong validation and transactional integrity. For reads, tune for fast queries and precomputed projections. CQRS supports complex workflows and high read volumes without slowing writes.
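
A minimal CQRS sketch looks like this. The command, the block names, and the occupancy view are hypothetical. The write side validates and owns the authoritative state, while the read side keeps a precomputed count that queries can fetch cheaply.

```python
from dataclasses import dataclass
from typing import Dict, Optional


class BlockOccupancyView:
    """Read model: containers per yard block, precomputed for fast queries."""

    def __init__(self) -> None:
        self._counts: Dict[str, int] = {}

    def on_moved(self, previous: Optional[str], current: str) -> None:
        if previous == current:
            return
        if previous is not None:
            self._counts[previous] -= 1
        self._counts[current] = self._counts.get(current, 0) + 1

    def occupancy(self, block: str) -> int:
        return self._counts.get(block, 0)


@dataclass(frozen=True)
class PlaceContainer:
    """Command: a request to change state."""
    container_id: str
    block: str


class WriteModel:
    """Validates commands and owns the authoritative container locations."""

    def __init__(self, view: BlockOccupancyView) -> None:
        self._locations: Dict[str, str] = {}
        self._view = view

    def handle(self, cmd: PlaceContainer) -> None:
        if not cmd.container_id or not cmd.block:
            raise ValueError("container_id and block are required")
        previous = self._locations.get(cmd.container_id)
        self._locations[cmd.container_id] = cmd.block
        self._view.on_moved(previous, cmd.block)   # keep the read model in sync


view = BlockOccupancyView()
writes = WriteModel(view)
writes.handle(PlaceContainer("C1", "A"))
writes.handle(PlaceContainer("C2", "A"))
writes.handle(PlaceContainer("C1", "B"))
print(view.occupancy("A"), view.occupancy("B"))
```

Here the read model updates synchronously for simplicity. In a distributed setup it would usually update from events, so reads become eventually consistent.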

Pair CQRS with event sourcing to retain an immutable log of state changes. Every change becomes an event in an append-only store. That design gives audit trails and replayability. You can rebuild projections by replaying events, which helps with recovery, analytics, and compliance. Event sourcing stores should include clear data retention policies to control growth and cost.
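
Replay is easiest to see with a small append-only store. The event kinds and fields below are assumptions for illustration, and the in-memory list stands in for durable storage.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional


@dataclass(frozen=True)
class Event:
    sequence: int       # 1-based position in the log
    kind: str           # "placed" or "removed" in this sketch
    container_id: str
    block: str = ""


class EventStore:
    """Append-only log: events are added, never updated or deleted in place."""

    def __init__(self) -> None:
        self._events: List[Event] = []

    def append(self, kind: str, container_id: str, block: str = "") -> Event:
        event = Event(len(self._events) + 1, kind, container_id, block)
        self._events.append(event)
        return event

    def read(self, up_to: Optional[int] = None) -> List[Event]:
        """Return all events, or only those with sequence <= up_to."""
        return list(self._events if up_to is None else self._events[:up_to])


def project_locations(events: Iterable[Event]) -> Dict[str, str]:
    """Rebuild the current-location projection by folding over the events."""
    locations: Dict[str, str] = {}
    for event in events:
        if event.kind == "placed":
            locations[event.container_id] = event.block
        elif event.kind == "removed":
            locations.pop(event.container_id, None)
    return locations


store = EventStore()
store.append("placed", "C1", "A")
store.append("placed", "C2", "A")
store.append("placed", "C1", "B")
store.append("removed", "C2")

print(project_locations(store.read()))          # state now
print(project_locations(store.read(up_to=2)))   # state as of event 2
```

Replaying up to a given sequence number answers audit questions such as where a container was when a planning decision was made.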

For terminal-like operations, the benefits are tangible. Event logs enable state projection in real-time, resilience to partial outages, and clear traceability for planning decisions. They also support experimentation. For example, Loadmaster.ai trains RL agents in simulation and can apply policies safely in production while events record decisions and outcomes for later review.

Implement event buses and durable storage with familiar tools. Use PostgreSQL for relational data and for append-only event logs when you need strong consistency. For fast caches of projections, use Redis to serve frequently accessed data. For messaging, RabbitMQ can ferry events between services reliably. These components let you scale read models independently and maintain a single source of truth.

Finally, adopt clear tooling and operational rules. Set up data retention policies, use partitioning to keep event stores manageable, and add monitoring that detects long replays or inconsistency between projections and the source of truth. CQRS and event sourcing together can help build systems that scale gracefully and meet audit requirements.

Deployment and Scaling

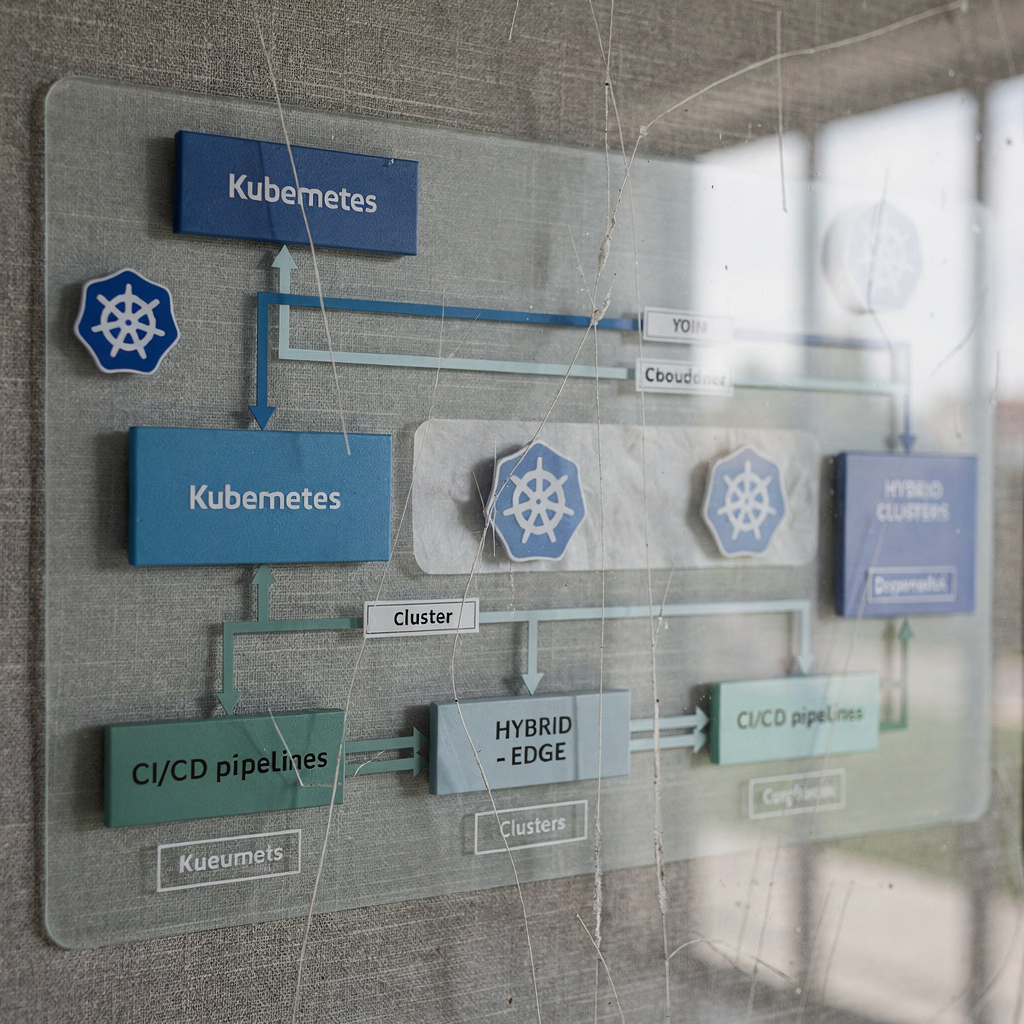

Choose deployment targets that match operational needs. Containers give consistency, and Kubernetes provides orchestration to manage multiple instances, scheduling, and self-healing. Serverless functions can handle bursty tasks and reduce overhead for sporadic workloads. Each model has trade-offs: containers offer control; serverless offers fast spin-up and pay-per-use billing.

Infrastructure as code unlocks repeatable setups and safer roll-outs. Use IaC to define networks, security groups, and compute resources in version control. This practice reduces configuration drift and simplifies disaster recovery. When you automate provisioning, you reduce manual error and speed up time to test.

Autoscaling policies should follow real metrics. Scale based on CPU, queue length, or custom business KPIs. For workload bursts, scale out across multiple instances rather than vertically increasing size. Also consider edge computing to run time-sensitive agents closer to equipment that needs instant responses. Edge computing nodes can preprocess telemetry and reduce pressure on centralized cloud servers.
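
A metric-driven scale-out rule can be sketched with the proportional formula that the Kubernetes Horizontal Pod Autoscaler documents: desired replicas equal the current replicas times the ratio of the observed metric to its target, rounded up. The function name, the bounds, and the queue-length example are assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 20) -> int:
    """Scale proportionally to metric/target, clamped to [min, max]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical example: the metric is queue length per replica, target is 60.
print(desired_replicas(current_replicas=4, current_metric=90, target_metric=60))
print(desired_replicas(current_replicas=4, current_metric=30, target_metric=60))
```

The clamp matters in practice: the upper bound caps cost during a runaway spike, and the lower bound keeps a warm instance for the next burst.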

Test autoscaling with realistic load tests and fault injection. Run chaos tests to simulate outages and ensure retry logic handles transient failures. For stateful services, coordinate deploys so you avoid split-brain scenarios. Use blue/green or canary deploy strategies to reduce risk on production systems.
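
Retry logic for transient failures is commonly written with exponential backoff and jitter. The sketch below is one such variant; the defaults and the set of retryable exceptions are assumptions, and the `sleep` parameter is injectable so tests can run without real delays.

```python
import random
import time
from typing import Callable, List, Tuple, Type, TypeVar

T = TypeVar("T")


def retry(func: Callable[[], T], attempts: int = 4, base_delay: float = 0.2,
          max_delay: float = 5.0,
          retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
          sleep: Callable[[float], None] = time.sleep) -> T:
    """Call func, retrying transient errors with capped exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except retry_on:
            if attempt == attempts:
                raise                      # out of attempts: surface the error
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))  # jitter spreads out retry storms
    raise AssertionError("unreachable")


# Simulated transient fault: fails twice, then succeeds.
state = {"calls": 0}


def flaky_telemetry_fetch() -> str:
    state["calls"] += 1
    if state["calls"] < 3:
        raise ConnectionError("simulated transient failure")
    return "ok"


recorded: List[float] = []
print(retry(flaky_telemetry_fetch, sleep=recorded.append), "after", state["calls"], "calls")
```

Only retry operations that are safe to repeat. Non-idempotent calls need a deduplication key before they go behind a retry loop.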

Observability is critical after deploy. Track metrics, logs, and distributed traces. Set alerts on error budgets and SLA breaches. Use dashboards to show health across regions. Also integrate continuous build systems with your pipelines so you can run unit tests for business logic and integration tests for critical flows before you deploy. For capacity planning tied to operations, see our research on scenario-based capacity optimization.
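
An error-budget alert reduces to simple arithmetic. In this sketch the SLO target, the request counts, and the 80 percent alert threshold are hypothetical values, not recommendations.

```python
def error_budget_consumed(slo_target: float, total_requests: int,
                          failed_requests: int) -> float:
    """Fraction of the error budget used; 1.0 means the budget is spent."""
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be between 0 and 1, e.g. 0.999")
    if total_requests <= 0:
        return 0.0
    allowed_failures = total_requests * (1 - slo_target)
    return failed_requests / allowed_failures


def should_alert(consumed: float, threshold: float = 0.8) -> bool:
    """Alert before the budget runs out, not after."""
    return consumed >= threshold


# Hypothetical window: a 99.9% SLO allows about 1,000 failures per million.
consumed = error_budget_consumed(0.999, total_requests=1_000_000, failed_requests=250)
print(f"budget consumed: {consumed:.0%}, alert: {should_alert(consumed)}")
```

Evaluate this over a rolling window so that a short incident shows up quickly while old failures age out.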

FAQ

What is the difference between architecture and software architecture?

Architecture refers to the overall structure and relationships of components in a system. Software architecture specifically focuses on how code, services, and data stores are organized and interact.

When should a company move from a monolithic system to microservices?

Consider migration when deployments slow, outage risk increases, or teams block each other frequently. Start by splitting low-risk bounded contexts and validate in a sandbox before large rollouts.

How does CQRS help with read-heavy workloads?

CQRS separates read models from write models so each can be optimized independently. This reduces contention and improves read performance under heavy loads.

What role does Kubernetes play in containerized deployments?

Kubernetes orchestrates containerized applications and manages multiple instances, scaling, and self-healing. It helps teams automate rollouts and maintain continuous availability.

How can event sourcing improve auditability?

Event sourcing records every state change in an immutable log that you can replay. This gives a clear audit trail of decisions and supports forensic analysis after incidents.

What measures reduce the risk of outages during migration?

Use feature flags, canary deploys, and rollback plans to limit blast radius. Also keep backward-compatible APIs and run heavy tests in a sandbox before production deploys.

Which tools support high-throughput messaging?

Options include proven brokers like RabbitMQ for reliable delivery and stream platforms for very high-volume needs. Choose based on durability, latency, and operational familiarity.

How do I choose between vertical scaling and horizontal scaling?

Use vertical scaling for quick increases in capacity on single nodes. Use horizontal scaling for redundancy and long-term growth because it distributes load across multiple instances.

What are practical first steps for improving scalability?

Start with clear metrics and load testing to identify bottlenecks. Then add caching for frequently accessed data, partition large datasets, and adopt autoscaling policies.

How can AI agents like those from Loadmaster.ai integrate with existing systems?

AI agents can run in a sandbox digital twin and then deploy with operational guardrails. They integrate via APIs and work alongside TOS solutions to improve planning and reduce rehandles without replacing core systems.

our products

stowAI: Innovates vessel planning. Faster rotation time of ships, increased flexibility towards shipping lines and customers.

stackAI: Build the stack in the most efficient way. Increase moves per hour by reducing shifters and increase crane efficiency.

jobAI: Get the most out of your equipment. Increase moves per hour by minimising waste and delays.
