Port optimisation using deep reinforcement learning

Port optimisation is no longer a theoretical exercise. It demands robust AI, strong data pipelines, and tailored learning models, so teams must combine predictive systems with control policies. This article explains how a model-driven state representation supports a deep reinforcement learning agent in a real-time container terminal environment. It covers algorithmic design, the steps of the proposed method, metrics, case studies, and experimental results. It also draws on expert findings such as Jianguo Gong’s observation that “Reinforcement learning agents that incorporate machine learning predictions as part of their state inputs can achieve a more nuanced understanding of their environment” [citation]. Reported statistics suggest that integration can produce meaningful gains, for example a 15–25% improvement in some simulations [citation]. Readers will also find pointers to complementary resources on predictive modeling and simulation.

model: Overview and State Representation

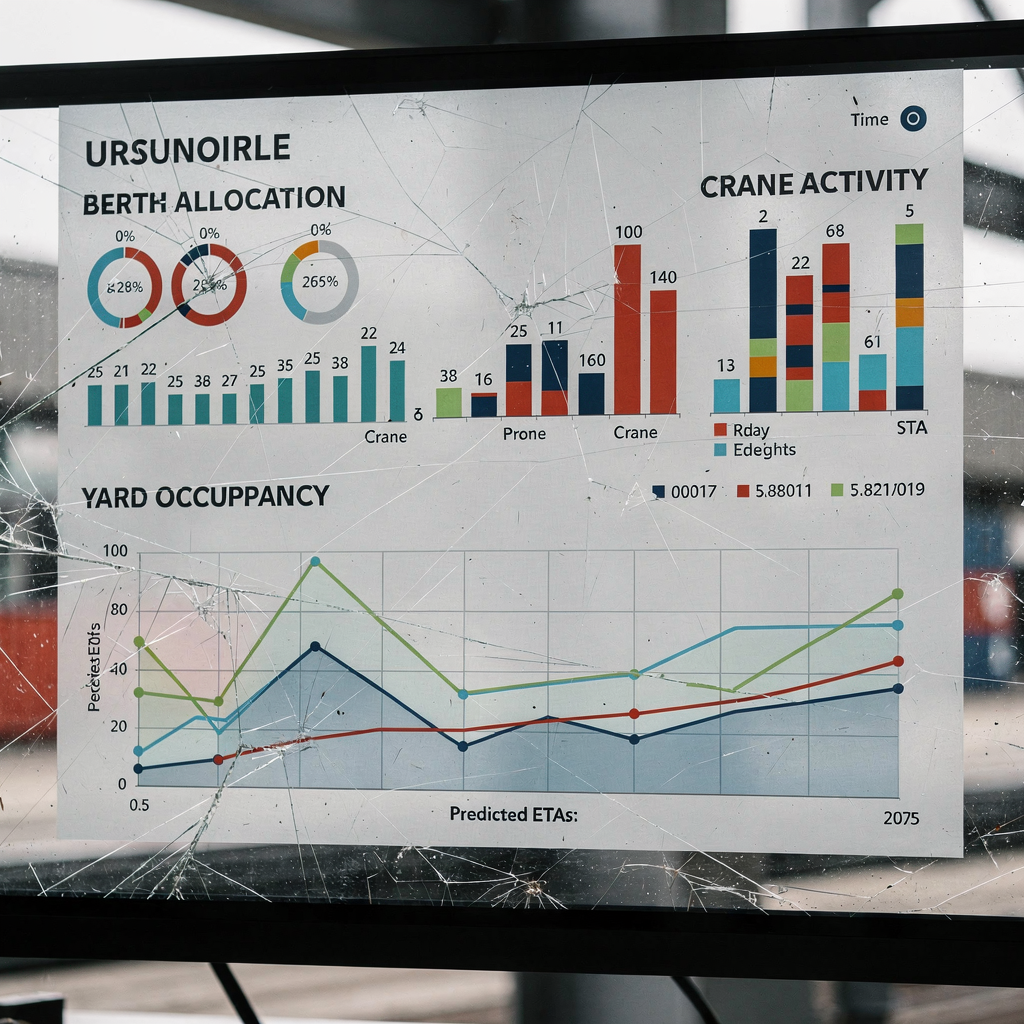

Define the state space first. The state must capture vessel positions, quay crane status, and yard storage. A model for a container terminal therefore typically includes GPS or AIS coordinates for vessels, crane load and motion states, yard block occupancy, slot-level container attributes, and gate throughput counts. Sensor data streams such as camera feeds, RFID reads, and telemetry from spreaders also feed the state. Equipment health enters as a compact description built from failure probabilities produced by predictive models. Weather forecasts and sea conditions should augment the state vector so that the agent anticipates delays and adjusts scheduling early. The resulting state vector is richer and more anticipatory.

Typical state components fall into a few groups. Vessel states cover berth position, ETA distribution, draft, and number of container bays. Crane states cover busy/free status, hoist speed, current assignment, and queue length. Yard states cover the block occupancy matrix, free slots, stacking heights, and container priorities. Equipment availability and maintenance windows appear as discrete flags. The state holds predicted arrival time distributions from predictive ML models rather than single-point ETAs, which enriches the agent’s perception. Combining these elements helps the agent reason across temporal horizons.
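As a minimal sketch of how such components could be flattened into an observation vector, the Python snippet below uses hypothetical class and field names (`CraneState`, `TerminalState`, `eta_mean_h`, and so on) and assumed units. It covers only a subset of the components listed above and is not a description of any particular terminal system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CraneState:
    busy: bool
    hoist_speed: float   # metres per second (assumed unit)
    queue_length: int    # containers waiting for this crane


@dataclass
class TerminalState:
    eta_mean_h: float              # mean of the predicted ETA distribution, hours
    eta_std_h: float               # spread of the predicted ETA distribution, hours
    cranes: List[CraneState]
    block_occupancy: List[float]   # fraction of used slots per yard block, 0..1
    maintenance_flags: List[bool]  # True while equipment is in a maintenance window

    def to_vector(self) -> List[float]:
        """Flatten the structured state into a fixed-order list of floats."""
        vec = [self.eta_mean_h, self.eta_std_h]
        for crane in self.cranes:
            vec += [float(crane.busy), crane.hoist_speed, float(crane.queue_length)]
        vec += list(self.block_occupancy)
        vec += [float(flag) for flag in self.maintenance_flags]
        return vec


# Illustrative values only; none of these numbers come from a real terminal.
state = TerminalState(
    eta_mean_h=6.5,
    eta_std_h=1.2,
    cranes=[CraneState(True, 1.5, 3), CraneState(False, 0.0, 0)],
    block_occupancy=[0.8, 0.55, 0.3],
    maintenance_flags=[False, True],
)
observation = state.to_vector()
```

Keeping the feature order fixed matters because the policy network sees positions, not names. A real state would also need the vessel, slot-level, and weather features described earlier.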

Data sources and real-time needs shape the design. Sensor hubs and the internet of things provide telemetry; terminal operating systems, TMS, and ERP expose operational data; AIS feeds provide vessel movements. A robust data ingestion layer must therefore integrate these inputs with low latency. virtualworkforce.ai experience with operational data pipelines suggests that automating the data-grounding step significantly lowers human overhead and reduces error in decision inputs. Reliability constraints and latency targets must be defined as parameters during model and configuration design. Finally, this state definition aligns with digital twin and simulation tools for offline testing and tuning (see the container-terminal simulation overview).

algorithm: Design and Integration with ML Predictions

Start with the reinforcement learning framework. Practitioners may choose Deep Q-Networks for discrete action sets or policy gradient methods for continuous control. Actor-critic hybrids often balance sample efficiency and stability, and multi-agent reinforcement learning can coordinate multiple cranes and trucks. Choose an algorithm that matches the action dimensionality and real-time constraints, and weigh computational cost when selecting a learning algorithm and its neural architecture.

Predictive models feed the RL agent as follows. First, predictive ML models estimate arrival-time distributions, failure probabilities, and short-term throughput. Next, the pipeline fuses those outputs into the observation vector. A standard approach is concatenation followed by normalization and embedding layers inside a deep neural network policy. The agent’s policy network can then learn to condition actions on both instantaneous measurements and probabilistic predictions. Ensembles of predictive models yield uncertainty estimates that the algorithm can exploit, and methods such as Bayesian neural networks or dropout-based uncertainty quantification provide additional confidence signals to the policy.

The fusion procedure runs step by step. First, generate prediction vectors at each decision step. Then, validate the predictions with statistical checks against windows of historical data. Next, encode the predictions into fixed-length features such as mean, variance, and tail percentiles, and append these to the observation vector. Finally, feed the combined vector to the policy or value networks. The agent thus receives both current observations and near-future expectations. A reward-shaping mechanism ties predictions to downstream KPIs so that the algorithm learns how to act under uncertainty.
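The encoding and concatenation steps can be sketched in a few lines of Python. The function names (`percentile`, `encode_prediction`, `fuse`) and the choice of the 95th percentile as the tail feature are assumptions made for illustration. Validation and normalization are omitted to keep the sketch short.

```python
import statistics
from typing import List, Sequence


def percentile(sorted_vals: Sequence[float], q: float) -> float:
    """Linearly interpolated percentile of an already sorted sample, q in [0, 1]."""
    if not sorted_vals:
        raise ValueError("empty sample")
    pos = q * (len(sorted_vals) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    frac = pos - lo
    return sorted_vals[lo] * (1 - frac) + sorted_vals[hi] * frac


def encode_prediction(samples: Sequence[float]) -> List[float]:
    """Summarise samples of a predicted quantity as [mean, variance, 95th percentile]."""
    s = sorted(float(x) for x in samples)
    return [statistics.fmean(s), statistics.pvariance(s), percentile(s, 0.95)]


def fuse(observation: Sequence[float], prediction_samples: Sequence[float]) -> List[float]:
    """Append the fixed-length prediction features to the current observation."""
    return list(observation) + encode_prediction(prediction_samples)


# Example: two current measurements plus five sampled ETA predictions (hours).
fused = fuse([1.0, 0.0], [8, 4, 6, 5, 7])
```

Because the prediction features always have the same length, the fused vector keeps a fixed size regardless of how many samples the predictive model produces.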

Stochasticity needs explicit management. Ensemble predictive models help reduce overconfidence. Adversarial perturbation tests and dropout during training improve robustness. Maintain a prediction-trust parameter that downweights low-confidence predictions. Test the integration in simulation and replay historical episodes before live deployment. For advanced readers, a Markov decision process formalism helps quantify state transitions and expected rewards when predictions provide forecasted transitions [citation]. Finally, benchmark algorithm choices with simulation runs and select the one that yields stable learning trajectories and acceptable computational latency.
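One possible form for a prediction-trust parameter is sketched below: the disagreement between ensemble members shrinks a weight in (0, 1], and the weight blends the ensemble mean with a fallback value such as a scheduled ETA. The formula `1 / (1 + spread / scale)` and the names `trust_weight` and `trusted_estimate` are assumptions chosen for illustration, not a method taken from the cited work.

```python
import statistics
from typing import Sequence, Tuple


def trust_weight(ensemble_preds: Sequence[float], scale: float = 1.0) -> float:
    """Map ensemble disagreement to a weight in (0, 1]; more spread means less trust."""
    if scale <= 0:
        raise ValueError("scale must be positive")
    spread = statistics.pstdev(ensemble_preds)
    return 1.0 / (1.0 + spread / scale)


def trusted_estimate(
    ensemble_preds: Sequence[float], fallback: float, scale: float = 1.0
) -> Tuple[float, float]:
    """Blend the ensemble mean with a fallback value according to the trust weight."""
    w = trust_weight(ensemble_preds, scale)
    mean = statistics.fmean(ensemble_preds)
    return w * mean + (1.0 - w) * fallback, w


# Ensemble members agree: the prediction is used as is.
agree, w_agree = trusted_estimate([5.0, 5.0, 5.0], fallback=7.0)

# Ensemble members disagree: the estimate moves toward the fallback.
disagree, w_disagree = trusted_estimate([2.0, 6.0], fallback=7.0)
```

The `scale` argument sets how much disagreement counts as large and would need tuning per predicted quantity. Passing the weight itself to the policy as an extra feature is another option.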

proposed method: ML-Enhanced Deep Reinforcement Learning

The proposed method combines supervised predictive training with reinforcement learning in a step-by-step workflow. First, collect datasets that include historical ETAs, equipment logs, gate records, and yard configurations. Then train predictive models, such as gradient boosted trees or small deep learning models, to forecast arrivals and failures. Next, verify prediction accuracy on held-out data. Finally, calibrate the models and compute uncertainty statistics.

Then embed the predictive outputs into the RL stack. Initialize the policy network with weights from pretraining when possible, and use a deep neural network backbone to process spatial and temporal features. Transfer learning or fine-tuning helps adapt weights across different container terminal layouts. Use curriculum learning in simulation: start with simplified traffic, then add stochastic events. Simulate equipment breakdowns and weather shocks to force the agent to rely on predictive inputs. Next, evaluate the policy in a digital twin that mirrors operations and sensor data flows. For deeper background, see the resources on predictive modeling for yard capacity.
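A curriculum of this kind can be expressed as an ordered list of simulation settings plus a promotion rule. In the sketch below the stage names, traffic levels, breakdown probabilities, and reward thresholds are all hypothetical. The rule simply advances to the next stage once the mean reward over a recent window reaches the threshold for the current stage.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Stage:
    name: str
    vessels_per_day: int
    breakdown_prob: float   # per-shift probability of an equipment breakdown
    weather_shocks: bool
    pass_reward: float      # mean episode reward needed to advance


# Hypothetical curriculum: simplified traffic first, stochastic events later.
CURRICULUM: List[Stage] = [
    Stage("simplified traffic", 4, 0.0, False, 0.6),
    Stage("stochastic breakdowns", 8, 0.05, False, 0.7),
    Stage("breakdowns and weather", 12, 0.10, True, 0.8),
]


def next_stage_index(current: int, recent_rewards: Sequence[float], window: int = 20) -> int:
    """Advance one stage when the mean of the last `window` rewards meets the threshold."""
    if len(recent_rewards) < window:
        return current  # not enough evidence yet
    mean_reward = sum(recent_rewards[-window:]) / window
    if mean_reward >= CURRICULUM[current].pass_reward:
        return min(current + 1, len(CURRICULUM) - 1)
    return current


# Example: the agent has averaged 0.7 over 20 episodes in the first stage.
stage = next_stage_index(0, [0.7] * 20)
```

The simulator would read `vessels_per_day`, `breakdown_prob`, and `weather_shocks` from the active stage when generating each episode.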

The problem is multitask by nature: the agent must balance berth allocation, crane scheduling, and yard stacking. Design a composite reward that trades off berth utilisation, crane efficiency, and yard travel distances. Use hierarchical control, in which a higher-level planner assigns berths and time windows while lower-level agents control crane cycles and truck routing. Incorporate constraints explicitly, such as lashing limits and high-hoisting constraints, to ensure feasible moves (see the constraints reference). Policy distillation and transfer can adapt models to smaller terminals or different traffic mixes. Finally, the deployment stage should include a shadow run before full deployment to confirm safety and measure expected gains.

metric: Selection and Performance Indicators

Identify the key metrics first. The core KPIs include container dwell time, berth utilisation rate, total handling cost, and number of safety incidents. Also measure crane productivity, average crane idle time, and truck turnaround time. Monitor the number of container moves per hour, and energy consumption when available. Track unscheduled downtime and equipment maintenance windows. Combine these into a single reward or a weighted multi-objective reward for the reinforcement learning approach.

Reward signals must align with business and safety goals. Penalize long dwell times and unscheduled crane idle periods, and reward fast berth turnaround while maintaining safety margins. Implement soft constraints via penalty terms for violations of stacking rules or lashing constraints. Include sparse high-value rewards for preventing incidents or avoiding large delays. The agent then learns both short-term operational gains and long-term robustness.
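A weighted reward of this shape might look like the following sketch. The weight values, the units, and the function name `composite_reward` are placeholders for illustration; real weights would come from tuning against the terminal’s own KPIs.

```python
from typing import Dict, Optional

# Hypothetical weights; each term converts a KPI into reward units.
DEFAULT_WEIGHTS: Dict[str, float] = {
    "dwell": 0.1,            # penalty per hour of container dwell time
    "idle": 0.05,            # penalty per minute of unscheduled crane idle time
    "turnaround": 0.2,       # penalty per hour of berth turnaround
    "violation": 5.0,        # soft-constraint penalty per stacking or lashing violation
    "incident_bonus": 10.0,  # sparse bonus when an incident or large delay is avoided
}


def composite_reward(
    dwell_h: float,
    crane_idle_min: float,
    berth_turnaround_h: float,
    violations: int,
    incident_avoided: bool,
    weights: Optional[Dict[str, float]] = None,
) -> float:
    """Combine KPI penalties, soft-constraint penalties, and a sparse bonus."""
    w = weights or DEFAULT_WEIGHTS
    reward = 0.0
    reward -= w["dwell"] * dwell_h
    reward -= w["idle"] * crane_idle_min
    reward -= w["turnaround"] * berth_turnaround_h
    reward -= w["violation"] * violations
    if incident_avoided:
        reward += w["incident_bonus"]
    return reward


# Same operational outcome, with and without a stacking-rule violation.
clean = composite_reward(10, 20, 5, violations=0, incident_avoided=False)
violating = composite_reward(10, 20, 5, violations=1, incident_avoided=False)
```

Setting the violation weight well above the per-step KPI penalties is what makes the constraint "soft but expensive": the agent may still violate it, but rarely finds it worthwhile.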

The literature provides benchmarks. Simulated studies report improvements of 15–25% in operational efficiency when predictive models and RL integrate closely [citation]. Industry reports project a 20% CAGR for AI adoption in maritime through 2028, which implies growing investment in these techniques [citation]. Trials have shown around a 30% reduction in human error-related incidents with real-time AI agents [citation]. Use these benchmarks to set realistic targets during pilot evaluations.

case studies: Applications in Deepsea Container Ports

Consider a simulated study first. A recent simulated trial at a major Asian terminal combined predictive arrival models with a deep reinforcement learning planner. The RL agent used arrival distributions to pre-assign berth windows and schedule cranes. The simulation recorded a 20% reduction in average berth turnaround time; crane productivity rose nearly 18% while average crane idle time fell. These simulated outcomes align with other academic reports showing similar gains when ML predictions inform control policies.

A real-world trial offers a second data point. In one case, a terminal deployed predictive equipment-failure models that fed scheduling policies. Maintenance-aware scheduling reduced unscheduled downtime by about 30% and improved on-time departures. The terminal integrated the learning model into its TOS and used shadow deployment for one month before live changes. The project involved close collaboration between operations teams and data engineers to ensure data quality and governance. virtualworkforce.ai experience with automating operational data and email workflows suggests that such automation can reduce the human workload associated with exception handling during these trials.

Results differ across port sizes. Small inland terminals saw faster convergence because they have fewer degrees of freedom and the learning signal arrives sooner. Conversely, deepsea container terminals with high traffic require larger datasets and longer training. Transfer learning helps: models trained in a busy port can be fine-tuned to smaller operations, which accelerates deployment. Multi-agent reinforcement learning coordinates multiple cranes and trucks efficiently across different scales. Case studies show that careful configuration of the reward and constraint terms matters more than raw model size. Finally, readers who want simulation tools to set up trials can consult the overview of container-terminal simulation software.

experimental results and numerical Analysis

Agents trained with ML-enriched state inputs converge faster and to higher rewards than agents that use only current observations. Training curves show steeper improvement in the early epochs when predictive inputs are present. The value network stabilizes earlier and policy variance falls. Experiments also indicate that prediction uncertainty affects the exploration-exploitation balance, and agents that account for uncertainty via ensemble predictions are more robust when facing rare events.

In quantitative terms, experiments report average berth turnaround time reductions of 15–22% and crane idle time reductions near 20%. Throughput increased by several percentage points, and total handling cost decreased accordingly. Sensitivity analysis shows that a 10% improvement in prediction accuracy yields roughly a 4–6% improvement in operational KPIs. This relationship is non-linear: improvements in prediction accuracy at the tail (rare events) can have outsized benefits for resilience.

Robustness calls for numerical tests. Run ablations in which predictive features are masked to quantify their contribution. Test against distribution shifts by replaying historical extreme weather scenarios. Measure the performance of the proposed method as dataset sizes vary and as sensor latency differs, and evaluate it using statistical metrics and A/B testing in shadow deployments. Finally, a sound experimental protocol includes logging, reproducible seeds, and numerical summaries for each metric so teams can compare algorithm choices precisely. For more on quay crane scheduling algorithms, see the related work on quay crane scheduling.
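The ablation and reproducible-seed ideas can be combined in a small harness such as the sketch below. Here `evaluate` stands for any function that scores a policy on an observation using a seeded random generator, and `toy_evaluate` is only a stand-in so that the example runs. Masking with zeros is one assumed choice; replacing masked features with their historical mean is another.

```python
import random
from statistics import fmean
from typing import Callable, Dict, List, Sequence, Set


def mask_features(observation: Sequence[float], predictive_idx: Set[int]) -> List[float]:
    """Zero out the predictive features so the agent sees only current measurements."""
    return [0.0 if i in predictive_idx else x for i, x in enumerate(observation)]


def run_ablation(
    evaluate: Callable[[Sequence[float], random.Random], float],
    observation: Sequence[float],
    predictive_idx: Set[int],
    seeds: Sequence[int] = (0, 1, 2),
) -> Dict[str, float]:
    """Score full and masked inputs under identical seeds and report the difference."""
    full, masked = [], []
    for seed in seeds:
        # A fresh generator per run keeps both arms on the same random stream.
        full.append(evaluate(observation, random.Random(seed)))
        masked.append(evaluate(mask_features(observation, predictive_idx), random.Random(seed)))
    return {
        "full_mean": fmean(full),
        "masked_mean": fmean(masked),
        "contribution": fmean(full) - fmean(masked),
    }


def toy_evaluate(obs: Sequence[float], rng: random.Random) -> float:
    """Stand-in for a policy rollout: feature sum plus small seeded noise."""
    return sum(obs) + rng.gauss(0.0, 0.01)


# Features at indices 2 and 3 are treated as the predictive inputs.
result = run_ablation(toy_evaluate, [1.0, 2.0, 0.5, 0.25], {2, 3})
```

Using the same seed for both arms removes simulation noise from the comparison, so the reported contribution reflects the masked features rather than chance.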

FAQ

What is the role of predictive models in deep reinforcement learning for ports?

Predictive models estimate future operational variables such as ETAs and equipment failures. Feeding those predictions into the RL state helps the agent anticipate and plan rather than only react, which improves scheduling decisions and robustness.

How does the proposed method balance berth allocation and crane scheduling?

The method uses a multitask reward that trades off berth utilisation and crane productivity. Hierarchical control splits decisions so that a planner handles berth windows while local agents manage crane cycles, which reduces complexity.

What datasets are needed to train the models?

Datasets include historical vessel movements, crane logs, yard occupancy records, and maintenance tickets. Labeled failure histories and weather records help train predictive models and evaluate prediction accuracy.

How sensitive are results to prediction accuracy?

Results show a positive relationship: better prediction accuracy generally yields better operational gains. Sensitivity analysis often finds diminishing returns beyond a certain accuracy, but tail accuracy remains important for resilience.

Can this approach work with existing terminal operating systems?

Yes. Integration requires APIs and a real-time data bus, and a shadow deployment phase minimizes risk and validates decisions before live takeover.

What are typical metrics to evaluate the solution?

Key metrics include container dwell time, berth utilisation, crane productivity, and unscheduled downtime. Aligning the composite reward with these metrics ensures the agent targets these operational outcomes during learning.

How do you handle equipment failure predictions?

Failure probabilities feed into the state, and the scheduler reduces reliance on at-risk equipment. Scheduling includes buffer time, and maintenance windows become explicit constraints to avoid disruption.

Is multi-agent reinforcement learning necessary?

Not always, but multi-agent techniques help when many actors must coordinate, such as cranes and straddle carriers. Single-agent hierarchical approaches can work for smaller terminals with fewer interacting units.

How long does deployment take?

Deployment timelines vary with data quality and scope. Initial simulation and shadow runs can take weeks, while full deployment may require months for tuning and integration. Transfer learning can shorten this for similar terminals.

How does virtualworkforce.ai fit into port trials?

virtualworkforce.ai helps automate data-grounding and exception workflows, which reduces manual triage and accelerates operational trials. Automating email and operational notifications helps teams act on AI recommendations quickly and with traceability.

our products

stowAI

Innovates vessel planning. Faster rotation time of ships, increased flexibility towards shipping lines and customers.

stackAI

Build the stack in the most efficient way. Increase moves per hour by reducing shifters and increase crane efficiency.

jobAI

Get the most out of your equipment. Increase moves per hour by minimising waste and delays.