event-driven system fundamentals: need an event, events as first-class citizens and container terminal context

Container logistics operate under tight timing and shifting priorities. The parts of the system include cranes, AGVs, yard management, gate operations and vessel planners. In this container terminal environment, coordinating these system components with low friction matters. Traditional request-response architectures often struggle under sudden load. Therefore teams move toward an event-driven approach to reduce coupling and speed decisions.

An event-driven system treats occurrences as the unit of work. Events represent a change in state or a measurement from sensors. In practice you need an event to signal that a new container arrived, a crane finished a move, or a truck entered the gate. Events allow services to decouple. Services publish events and other services subscribe. This approach routes events through an event bus or broker so consumers react asynchronously. As a result, services scale independently and operators see faster actions.
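The publish/subscribe idea can be sketched in a few lines. This is a minimal in-process illustration, not a production broker: the `Event` and `EventBus` classes and the event type names are assumptions made for this example, and delivery here is synchronous, whereas a real broker would deliver asynchronously.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Event:
    """An immutable record of something that happened in the terminal."""
    type: str  # hypothetical type name, e.g. "container.arrived"
    payload: dict = field(default_factory=dict)


class EventBus:
    """Toy in-process bus: producers publish, subscribers react."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[Event], None]) -> None:
        """Register a handler for one event type."""
        self._handlers[event_type].append(handler)

    def publish(self, event: Event) -> int:
        """Deliver the event to every subscriber; return how many were notified."""
        handlers = self._handlers.get(event.type, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


# Usage: a yard service reacts to arrivals without the gate service knowing it exists.
bus = EventBus()
arrivals = []
bus.subscribe("container.arrived", lambda e: arrivals.append(e.payload["container_id"]))
bus.publish(Event("container.arrived", {"container_id": "MSCU1234567"}))
print(arrivals)
```

The publisher never references the subscriber, which is the decoupling the paragraph above describes.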

When teams adopt the mindset that events are first-class citizens, they design flows around event taxonomy, event schemas and event delivery guarantees. Treating events as first-class citizens shifts control from asking for status to reacting to change. Systems process real-time updates instead of polling endpoints. This transition reduces needless API traffic and improves observability. For further reading on gate throughput and how terminals measure delay, see the practical strategies in our guide to container terminal gate optimization (container terminal gate optimization).

Also, teams often pair event-driven design with microservices to gain modularity. This pairing preserves autonomy. It helps avoid single points of failure. For terminals seeking data-driven operational gains, see our piece on predicting yard congestion in terminal operations (predicting yard congestion). Finally, companies like virtualworkforce.ai use events to automate email notifications and to convert inbound messages into actionable events, so manual triage drops dramatically and workflows close faster.

real time event flow: eda for real-time event and data processing

Real-time event flows power operational tempo. First, a sensor or operator emits a new event. Next, that event travels to a broker or event bus. Then, interested services consume and act. This sequence creates an elastic pipeline for real-time data and command signals. EDA principles stress minimal coupling, immutable event records and idempotent consumers. In practice, terminals stream telemetry from yard management, crane controllers and gates as real-time data to central processors.
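To make "immutable event records and idempotent consumers" concrete, here is a small sketch. The `CraneMoveCompleted` record, its fields and the `MoveCounter` consumer are hypothetical names chosen for illustration. A real consumer would keep its set of seen IDs in durable storage rather than in memory.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class CraneMoveCompleted:
    event_id: str      # unique per event; used for deduplication
    crane_id: str
    container_id: str


class MoveCounter:
    """Idempotent consumer: processing the same event twice has no extra effect."""

    def __init__(self) -> None:
        self._seen: Set[str] = set()          # in-memory only, for illustration
        self.moves_by_crane: Dict[str, int] = {}

    def handle(self, event: CraneMoveCompleted) -> bool:
        """Apply the event once; return False if it was a duplicate delivery."""
        if event.event_id in self._seen:
            return False
        self._seen.add(event.event_id)
        self.moves_by_crane[event.crane_id] = self.moves_by_crane.get(event.crane_id, 0) + 1
        return True


counter = MoveCounter()
move = CraneMoveCompleted("evt-1", "QC-01", "MSCU1234567")
counter.handle(move)
counter.handle(move)  # redelivery from the broker is ignored
print(counter.moves_by_crane)
```

Because duplicates are harmless, the broker can safely redeliver after a failure without inflating the move count.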

Event-driven patterns enable both event and stream processing. Teams often combine pub-sub for notifications with stream processing for aggregation and analytics. For example, a stream job can compute yard density across stacks and then publish alerts for reassigning cranes. Studies show event-driven approaches cut latency in IoT scenarios by about 30–40% when compared to conventional REST models (analysis of event-driven APIs). Also, research on microservice systems reports throughput improvements up to 5× during peaks when using event-based designs (cloud-based event-driven design).
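The yard-density example above could look roughly like the following sketch. It assumes each incoming event is a `(block, delta)` pair, where `delta` is +1 for a container placed and -1 for one removed. The 0.85 threshold and the block capacities are illustrative values, not figures from any terminal.

```python
from typing import Dict, Iterable, Iterator, Set, Tuple


def density_alerts(
    events: Iterable[Tuple[str, int]],
    capacity: Dict[str, int],
    threshold: float = 0.85,  # assumed alert level, tune per terminal
) -> Iterator[Tuple[str, float]]:
    """Yield (block, density) each time a block rises to or above the threshold."""
    counts: Dict[str, int] = {}
    alerted: Set[str] = set()
    for block, delta in events:
        counts[block] = counts.get(block, 0) + delta
        density = counts[block] / capacity[block]
        if density >= threshold and block not in alerted:
            alerted.add(block)       # alert once per crossing, not per event
            yield block, density
        elif density < threshold:
            alerted.discard(block)   # re-arm the alert once the block drains
```

In a deployed pipeline the yielded alerts would themselves be published as events, so a crane-assignment service can subscribe to them.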

Further, real-time analytics drive decision loops. Teams can detect congestion, reroute trucks and adjust crane schedules within seconds. The result is lower dwell times and higher throughput. For terminals focused on crane productivity and scheduling, our work on optimizing quay crane productivity shows how analytics and events align (optimizing quay crane productivity).

Also, EDA reduces pressure on request-response architectures by avoiding synchronous calls for state. Instead, services listen for relevant events. This change improves responsiveness across distributed systems and supports volumes of real-time data at scale. For terminals adopting cloud services and containerized stacks, the design enables event streaming to be resilient and efficient.

build an event-driven architecture: apis, event driven patterns and microservices

To build an event-driven architecture in a modern stack, start with clear API contracts and messaging schemas. First, define what a new event contains and how receivers validate it. Next, map event types to topics, and design schemas with versioning. Use tools that validate schemas at runtime. Also, document your event-driven APIs so integrators can send events correctly. Good contracts reduce errors and simplify upgrades.
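As a rough sketch of versioned schemas with runtime validation, the snippet below keeps a registry keyed by event type and version. The envelope fields (`type`, `schema_version`, `payload`) and the `container.arrived` schemas are assumptions for illustration. A real deployment would more likely use a schema registry or a JSON Schema or Avro validator.

```python
from typing import Dict, List, Tuple

# Hypothetical registry: (event type, schema version) -> required fields and types.
SCHEMAS: Dict[Tuple[str, int], Dict[str, type]] = {
    ("container.arrived", 1): {"container_id": str, "gate": str},
    # Version 2 adds a field; version 1 stays registered for older producers.
    ("container.arrived", 2): {"container_id": str, "gate": str, "weight_kg": int},
}


def validate(event: dict) -> List[str]:
    """Return a list of problems; an empty list means the event is valid."""
    key = (event.get("type"), event.get("schema_version"))
    schema = SCHEMAS.get(key)
    if schema is None:
        return [f"unknown schema {key}"]
    payload = event.get("payload", {})
    problems = []
    for name, expected in schema.items():
        if name not in payload:
            problems.append(f"missing field: {name}")
        elif not isinstance(payload[name], expected):
            problems.append(f"wrong type for {name}: expected {expected.__name__}")
    return problems
```

Keeping both versions registered lets older producers keep sending version 1 while consumers migrate to version 2.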

Then select patterns. Publish/subscribe supports broad distribution of notifications. CQRS separates command handling from read models for low-latency queries. Use pub-sub and event streaming together where needed. A queue can buffer bursts and protect downstream services. Also, design idempotent consumers and include event version metadata so you can evolve formats without breaking subscribers. This approach helps decouple producers and consumers and thus improves scalability and fault isolation.
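The CQRS split mentioned above can be illustrated with a write side that validates commands and appends events, and a read side that folds those events into a query-friendly view. Class names, the event shape and the slot notation are hypothetical. In practice the event log would be a broker topic rather than a Python list.

```python
from typing import Dict, List, Optional


class YardCommandHandler:
    """Write side: validates commands and appends resulting events to a log."""

    def __init__(self, log: List[dict]) -> None:
        self.log = log  # stand-in for a broker topic

    def move_container(self, container_id: str, to_slot: str) -> dict:
        if not container_id or not to_slot:
            raise ValueError("container_id and to_slot are required")
        event = {"type": "container.moved", "container_id": container_id, "slot": to_slot}
        self.log.append(event)
        return event


class ContainerLocationView:
    """Read side: a precomputed view optimised for 'where is X?' queries."""

    def __init__(self) -> None:
        self._slot_by_container: Dict[str, str] = {}

    def apply(self, event: dict) -> None:
        if event["type"] == "container.moved":
            self._slot_by_container[event["container_id"]] = event["slot"]

    def where_is(self, container_id: str) -> Optional[str]:
        return self._slot_by_container.get(container_id)


log: List[dict] = []
commands = YardCommandHandler(log)
commands.move_container("MSCU1234567", "B12-03-2")
commands.move_container("MSCU1234567", "B14-01-1")

view = ContainerLocationView()
for recorded in log:  # replaying the log rebuilds the view from scratch
    view.apply(recorded)
print(view.where_is("MSCU1234567"))
```

Because the view is derived purely from the log, it can be rebuilt or reshaped later without touching the command side.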

When you deploy into Docker and Kubernetes clusters, package microservices into lightweight Docker container images and manage them with an orchestrator. Configure horizontal pod autoscaling to handle fluctuating container terminal workloads. The microservices model lets teams scale read models differently from command processors. Consequently, systems can handle large volumes of events without bogging down critical flows.

Also remember security and governance. Authenticate event producers and encrypt payloads in transit. Track event delivery and retention for audit. For additional implementation patterns that touch scheduling and stowage, read about automated container terminal stowage replanning software (stowage replanning).

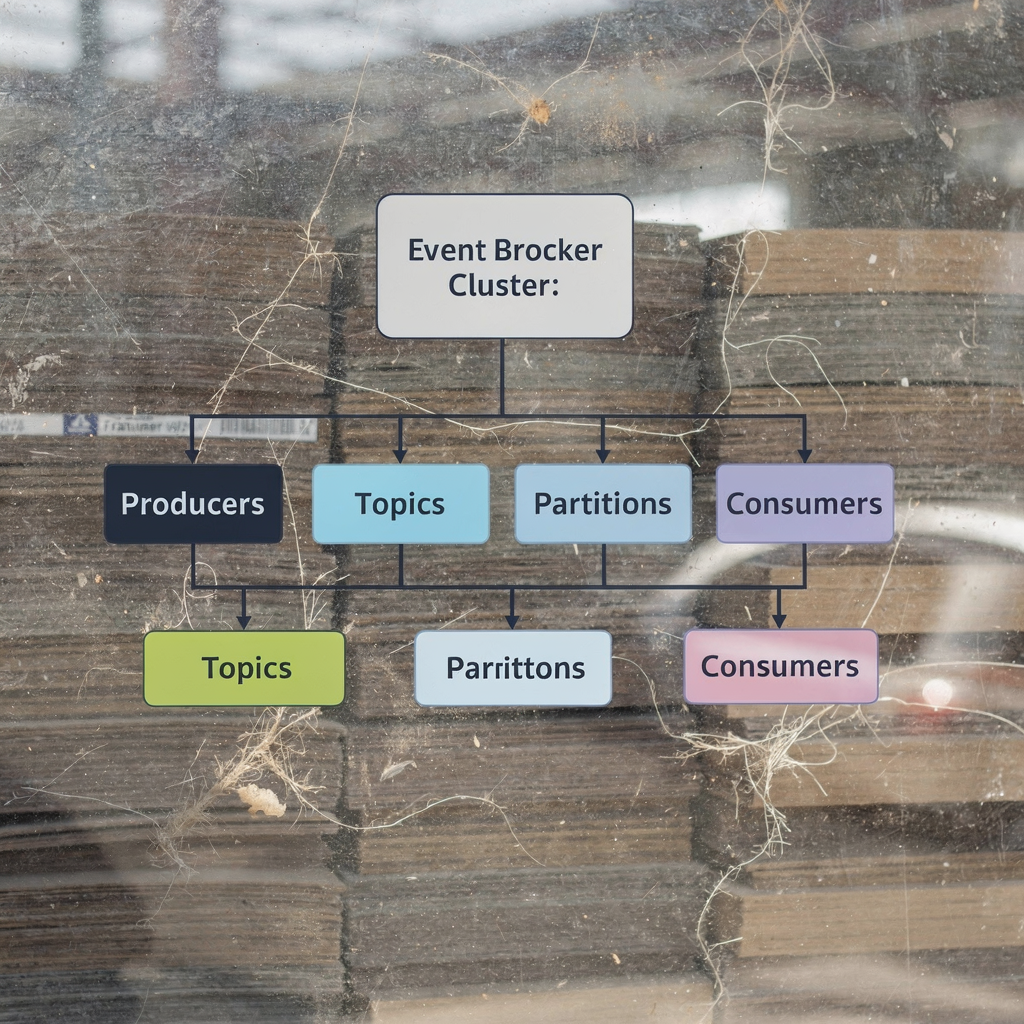

implementing event-driven architecture: apache kafka as event broker and broker design

Implementing event-driven architecture often centers on choosing a robust event broker. Many teams use Apache Kafka as the backbone because it supports persistent logs, partitioning and high throughput. Kafka provides stream processing primitives and integrates with connectors to legacy systems and cloud services. In production, Kafka helps systems handle tens of thousands of events per second and retain event history for replay. For broker choice, evaluate latency, durability and ecosystem support.

When designing a broker topology, consider topic partitioning and replication. Partition keys control ordering and parallelism: events that share a key land in the same partition and keep their order, while different partitions are consumed in parallel. Replication factors support fault tolerance. Also, set retention windows and compaction rules based on data processing needs. For exactly-once processing semantics, combine idempotent, transactional producers with consumers that read only committed messages, and keep consumers idempotent so that any redelivery is harmless. These measures reduce duplicate processing and the risk of inconsistent state in downstream services.
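The effect of a partition key can be shown without a running broker. The sketch below uses CRC32 purely as a stand-in hash. Kafka's Java client uses a murmur2 hash by default, so real partition numbers will differ. The `container_id` key and the event shape are assumptions for illustration.

```python
import zlib
from collections import defaultdict
from typing import Dict, List


def partition_for(key: str, num_partitions: int) -> int:
    """Stable hash of the key modulo the partition count (CRC32 as a stand-in)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(events: List[dict], num_partitions: int) -> Dict[int, List[dict]]:
    """Group events by partition, preserving arrival order within each partition."""
    partitions: Dict[int, List[dict]] = defaultdict(list)
    for event in events:
        partitions[partition_for(event["container_id"], num_partitions)].append(event)
    return dict(partitions)
```

Keying by container ID keeps every event for one container in order, while events for different containers can still be processed in parallel on other partitions.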

Design broker clusters for high availability. Use multi-cluster replication, for example Kafka's MirrorMaker, to span data centers. Include monitoring and alerting for consumer lag and failed partitions. Additionally, integrate the broker with terminal operating systems via connectors or an event mesh so legacy TOS platforms can send and receive events reliably. When integrating with older stacks, middleware adapters translate TOS messages into standardized event schemas.
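Consumer lag is usually computed per partition as the difference between the latest offset in the log and the offset the consumer group has committed. The helper below is a sketch of that arithmetic only. The function names and the alert threshold are assumptions, and the offsets would come from your broker's admin or metrics interface.

```python
from typing import Dict, List


def consumer_lag(end_offsets: Dict[int, int], committed: Dict[int, int]) -> Dict[int, int]:
    """Lag per partition: log end offset minus committed offset, floored at zero."""
    return {
        partition: max(end - committed.get(partition, 0), 0)
        for partition, end in end_offsets.items()
    }


def lagging_partitions(lag: Dict[int, int], max_lag: int) -> List[int]:
    """Partitions whose lag exceeds the (assumed) alert threshold."""
    return sorted(p for p, value in lag.items() if value > max_lag)
```

A steadily growing lag on one partition often points to a slow or stuck consumer, which is why it is worth alerting on separately from broker health.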

Also, choose patterns for event routing. For some flows a lightweight event bus handles notifications. For heavy analytic workloads use dedicated topics for event streaming and stream processors. Finally, include dry-run and replay strategies to validate changes. For an overview of microservices potential in IoT and event streaming, review research on microservices in IoT (microservices in IoT).

api design and benefits of event-driven architecture for terminal operations

Good API design matters in event-driven solutions. First, model each event schema with clear fields and constraints. Then apply idempotency keys so retries do not cause state corruption. Also, implement schema versioning and keep changes backward-compatible. Secure event channels with strong authentication and encryption. Use tokens or mTLS and validate producers before they can send events. These best practices reduce the attack surface and maintain trust in event delivery.

The benefits of event-driven architecture are measurable. Studies report latency reductions of roughly 30–40% in comparable IoT scenarios, which translates to faster gate processing and quicker crane handoffs (microservice real-time IoT study). Systems also record throughput gains up to 5× during peaks when moving from synchronous calls to event flows (throughput improvement study). Error rates on resilient platforms often stay under 5% under heavy load (Dockerized endpoint management).

Operationally, this leads to reduced dwell times and improved yard utilisation. For example, events that trigger dynamic equipment pool allocation let terminals match resources to demand in seconds. That capability improves terminal operations and reduces truck turnaround. To explore related scheduling and allocation strategies, see our analysis of dynamic equipment pool allocation based on real-time demand in container terminals (dynamic equipment pool allocation).

Finally, design API endpoints that accept events and expose read models for queries. Keep query performance high by using precomputed views and caching. This design preserves low latency for operator dashboards and automations, enabling teams to react to volumes of real-time data smoothly.

use case: eda in terminal operating systems

This use case shows an integration of event-driven approaches into terminal operating systems. A terminal linked its TOS to an event broker and started routing events when trucks arrived, when cranes completed lifts, and when yard stacks moved. The TOS published standardized messages that downstream analytics consumed. Then, a set of microservices updated assignment rules and notified human operators of exceptions. The flow reduced manual handoffs and sped up decision loops.

Middleware played a central role. Adapters translated legacy TOS messages into the event taxonomy and ensured that events are published in a consistent schema. This middleware also handled authentication and performed schema validation. The design reduced friction when connecting third-party carriers and external systems. As a result, the terminal avoided reengineering its older platforms while gaining modern event delivery capabilities.
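An adapter of this kind might look like the sketch below. The pipe-delimited legacy format, the message codes and the target event envelope are all invented for illustration. Real TOS message formats vary by vendor and would need their own parsing rules.

```python
from typing import Dict

# Hypothetical mapping from legacy TOS message codes to standardized event types.
LEGACY_TYPES: Dict[str, str] = {
    "GATEIN": "truck.entered_gate",
    "LIFTDONE": "crane.lift_completed",
}


def translate(line: str) -> dict:
    """Convert one legacy line 'CODE|EQUIPMENT|CONTAINER|TIMESTAMP' into an event."""
    parts = line.strip().split("|")
    if len(parts) != 4:
        raise ValueError(f"expected 4 fields, got {len(parts)}")
    code, equipment_id, container_id, timestamp = parts
    if code not in LEGACY_TYPES:
        raise ValueError(f"unknown legacy message code: {code}")
    return {
        "type": LEGACY_TYPES[code],
        "schema_version": 1,
        "occurred_at": timestamp,  # passed through unchanged
        "payload": {"equipment_id": equipment_id, "container_id": container_id},
    }
```

Rejecting malformed lines at the adapter keeps bad data out of the broker, so downstream consumers only ever see events in the agreed schema.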

Key outcomes included lower crane idle time, better yard density control, and fewer gate backups. Metrics tracked during rollout matched published gains: lower latency in data processing and increased throughput during peak shifts. In our experience, integrating event-driven systems with email automation agents, like those from virtualworkforce.ai, helps close the loop on operational messages. The agents can parse inbound emails, transform them into structured events and route them to the correct service or human operator. That integration reduces email triage and speeds response for exceptions.

Finally, lessons learned emphasized governance, schema evolution and thorough testing. Teams that succeed work from a comprehensive event-driven architecture plan that covers replay strategies, disaster recovery and gradual cutovers. For migration-specific guidance, consider resources on data consistency and cutover planning for TOS migrations in container terminals (TOS migration planning).

FAQ

What is an event-driven architecture and how does it differ from request-response architectures?

An event-driven architecture organizes work around events that describe changes in state. In contrast, request-response architectures require services to ask for information and wait for replies. This shift lets systems react in real time without tight coupling, which improves responsiveness and flexibility.

Why should a terminal consider implementing an event-driven architecture?

Because event-driven approaches reduce latency and improve throughput during peaks. They let processors scale independently and help terminals handle large volumes of real-time data more reliably.

How do you choose a broker for event streaming?

Choose a broker based on throughput, replication, latency and ecosystem connectors. Many teams use Apache Kafka for high-throughput use cases, while others evaluate cloud services or managed offerings from AWS for easier operations.

Can legacy terminal operating systems work with event-driven systems?

Yes. Middleware adapters translate legacy messages into standardized events and ensure compatibility. This strategy reduces the need for a full TOS rewrite during the transition to event delivery models.

What are key api design best practices for events?

Design idempotent operations, apply schema versioning and secure channels with proper authentication and encryption. Also, document your event-driven APIs and use validation at the broker to reject malformed messages.

How do microservices support scalability in terminal environments?

Microservices split responsibilities so teams can scale critical services independently. With container orchestration, terminals allocate resources where demand spikes and maintain steady performance when event volumes grow.

What metrics should terminals track after deploying an event-driven architecture?

Track latency, throughput, error rates and processing lag. Also monitor business metrics like gate throughput, crane utilization and yard dwell time to link technical changes to operational outcomes.

Is exactly-once delivery required for terminal operations?

Not always, but many critical flows demand stronger guarantees. Use transactional producers and idempotent consumers when duplicated actions would cause incorrect state or costs. Elsewhere, at-least-once may suffice with proper deduplication.

How do events improve analytics and decision-making?

Events provide continuous streams of truth that analytic engines can consume in real time. This enables rapid detection of congestion and immediate corrective actions, improving operational KPIs.

What common pitfalls should teams avoid when building event-driven systems?

Avoid unclear schemas, weak governance and insufficient testing of replay and recovery. Also ensure your broker topology supports partitioning and replication so you can maintain availability under load.
